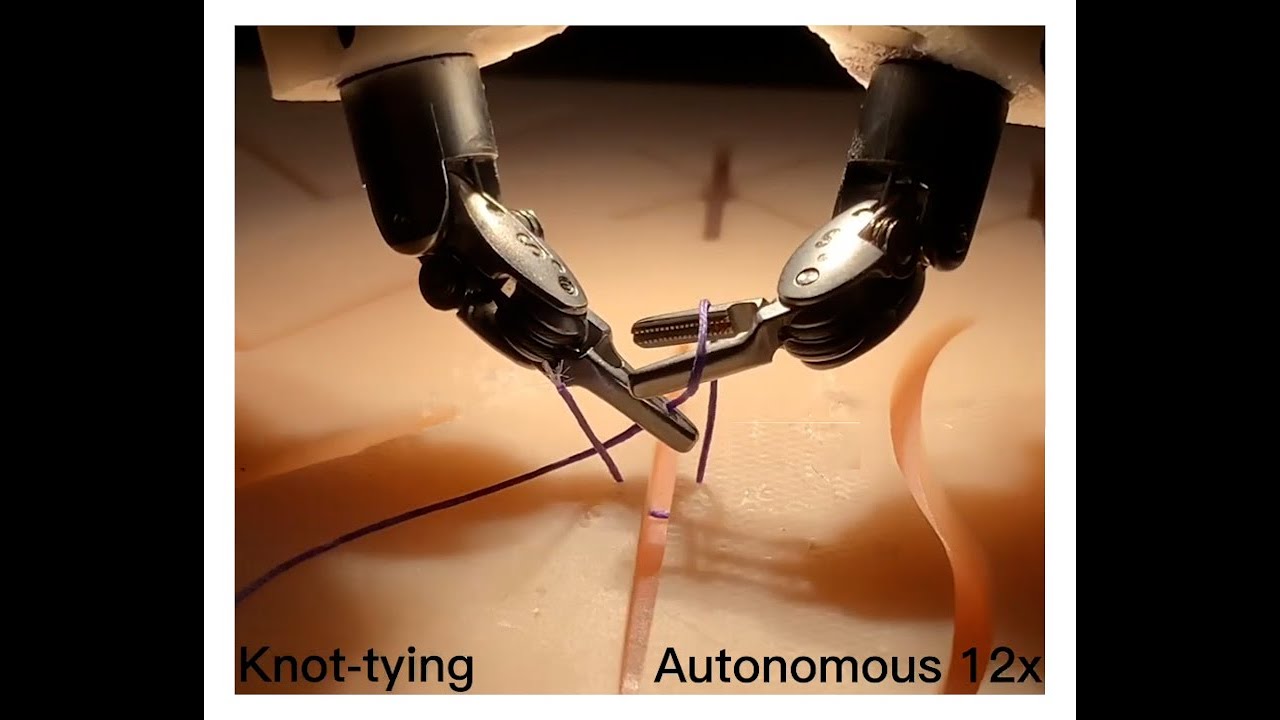

Watching old episodes of ER won’t make you a doctor, but watching videos may be all the training a robotic surgeon’s AI brain needs to sew you up after a procedure. Researchers at Johns Hopkins University and Stanford University have published a new paper showcasing a surgical robot that can perform some procedures as well as a human after simply watching humans do them.

The research team tested their idea on the popular da Vinci Surgical System, which is widely used for minimally invasive surgery. Programming a robot usually means manually specifying every movement you want it to make. The researchers bypassed this with imitation learning, a technique that teaches a robot skills by having it observe human demonstrations rather than following hand-coded instructions.

The researchers assembled hundreds of videos, recorded from wrist-mounted cameras, showing how human doctors perform three specific tasks: needle manipulation, tissue lifting, and suturing. They used the same kind of training behind ChatGPT and other AI models, but instead of text, the model learned how human hands and the tools they hold move. This kinematic data turns movement into math that the model can apply to carry out the procedures on request. After watching the videos, the AI model could use the da Vinci platform to reproduce the same techniques. The approach is not unlike Google’s experiments in teaching AI-powered robots to navigate spaces and complete tasks by showing them videos.

Surgical AI

“It’s really magical to have this model and all we do is feed it camera input and it can…

Read full post on TechRadar