- Nvidia and xAI collaborate on Colossus development

- xAI has markedly cut down ‘flow collisions’ during AI model training

- Spectrum-X has been crucial in training the Grok AI model family

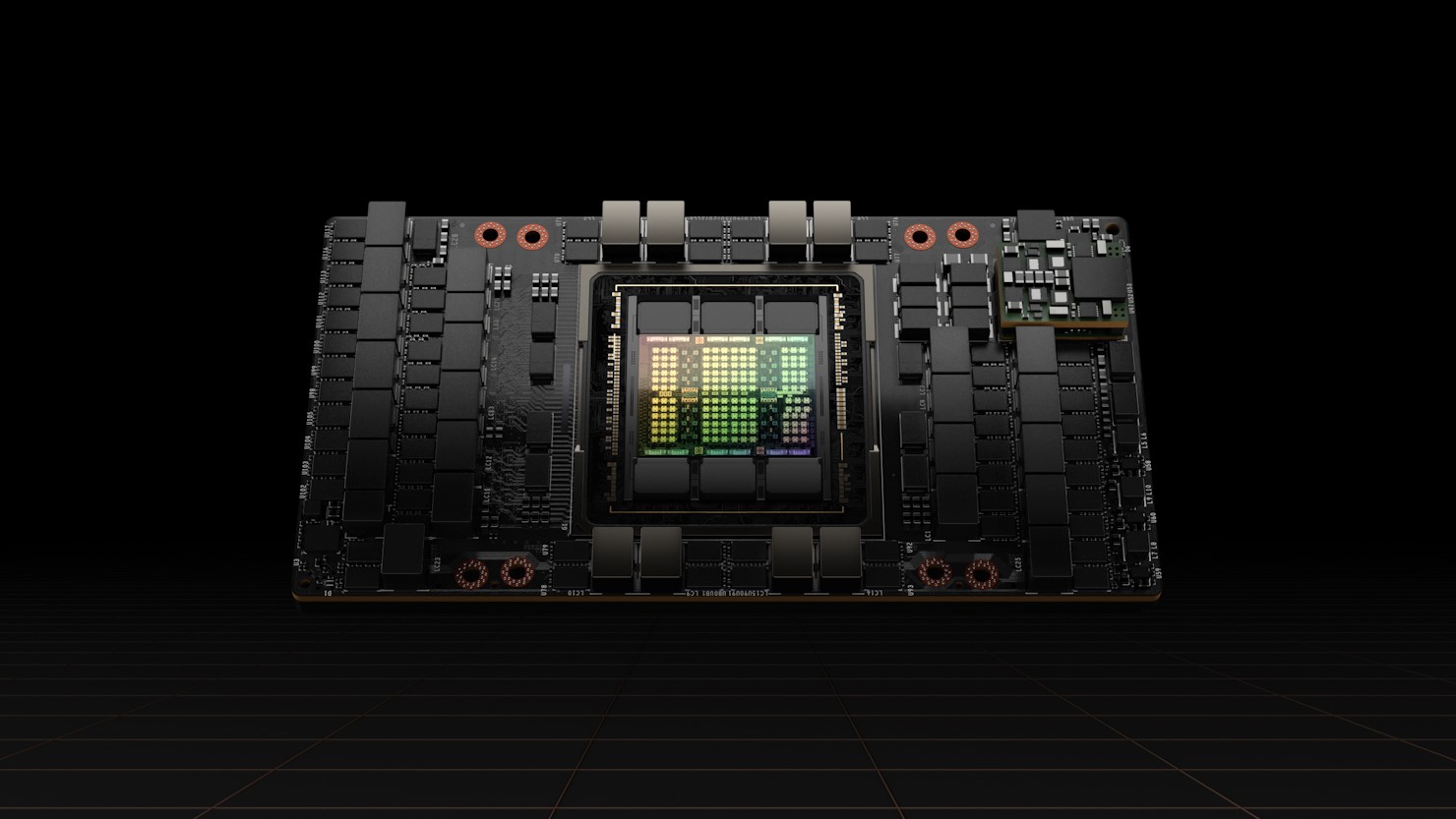

Nvidia has shed light on how xAI’s ‘Colossus’ supercomputer cluster can keep a handle on 100,000 Hopper GPUs – and it’s all down to using the chipmaker’s Spectrum-X Ethernet networking platform.

Spectrum-X, the company said, is designed to deliver high performance to multi-tenant, hyperscale AI factories using Remote Direct Memory Access (RDMA) networking.

The platform has been deployed at Colossus, the world’s largest AI supercomputer, since its inception. The Elon Musk-owned firm has been using the cluster to train its Grok series of large language models (LLMs), which power the chatbots offered to X users.

The facility was built in collaboration with Nvidia in just 122 days, and xAI is currently in the process of expanding it, with plans to deploy a total of 200,000 Nvidia Hopper GPUs.

Training Grok takes serious firepower

The Grok AI models are extremely large. Grok-1 has 314 billion parameters, and Grok-2 outperformed Claude 3.5 Sonnet and GPT-4 Turbo when it launched in August.

Naturally, training these models requires significant network performance. Using Nvidia’s Spectrum-X platform, xAI recorded zero application latency degradation and no packet loss from ‘flow collisions’, the bottlenecks that form when traffic contends for the same AI networking paths.
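To make the idea of a flow collision concrete, here is a toy Python sketch. It is an illustration under stated assumptions, not a model of how Spectrum-X works: it assumes every flow wants a full link's worth of bandwidth and that a static hash (as in classic ECMP load balancing) pins each flow to one of several equal-cost links. When two flows hash to the same link they have to share it while another link sits idle, so usable fabric capacity drops. The function names (`hashed_assignment`, `balanced_assignment`, `aggregate_throughput`, `max_flows_per_link`) are hypothetical.

```python
import random
from collections import Counter


def hashed_assignment(num_flows: int, num_links: int, seed: int = 0) -> list[int]:
    """Static ECMP-style placement: each flow is pinned to a pseudo-random link."""
    rng = random.Random(seed)
    return [rng.randrange(num_links) for _ in range(num_flows)]


def balanced_assignment(num_flows: int, num_links: int) -> list[int]:
    """Idealised load-aware placement: spread flows evenly across the links."""
    return [i % num_links for i in range(num_flows)]


def aggregate_throughput(assignment: list[int], num_links: int) -> float:
    """Fraction of total fabric capacity in use (each link has capacity 1.0).

    Assumption: every flow could saturate a whole link on its own, so any link
    carrying at least one flow runs at full rate and an idle link delivers
    nothing. Colliding flows merely split one link's bandwidth between them.
    """
    busy_links = len(set(assignment))
    return busy_links / num_links


def max_flows_per_link(assignment: list[int]) -> int:
    """Worst collision: the largest number of flows sharing a single link."""
    return Counter(assignment).most_common(1)[0][1]


if __name__ == "__main__":
    links = flows = 1024
    placements = {
        "hashed": hashed_assignment(flows, links),
        "balanced": balanced_assignment(flows, links),
    }
    for name, placement in placements.items():
        print(
            f"{name:>8}: throughput={aggregate_throughput(placement, links):.0%}, "
            f"max flows on one link={max_flows_per_link(placement)}"
        )
```

With equal numbers of flows and links K, purely random placement leaves an expected fraction (1 − 1/K)^K of links idle, roughly 37% for large K, so the hashed case lands near 63% throughput while the balanced case reaches 100%. Production fabrics attack this with techniques such as adaptive routing and congestion control; the sketch does not reproduce how Nvidia implements them.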

xAI said it has been able to maintain 95% data throughput thanks to Spectrum-X’s congestion control capabilities. The company added that this level of performance cannot be delivered at this scale via…

Read the full post on TechRadar
