NVIDIA has shared the first benchmarks of its Blackwell GPUs in MLPerf v4.1 AI Training workloads, delivering a 2.2x gain over Hopper.

NVIDIA Demolishes The Competition With Blackwell GPUs, Delivering Up To A 2.2x Gain In MLPerf v4.1 AI Training Benchmarks Versus Hopper

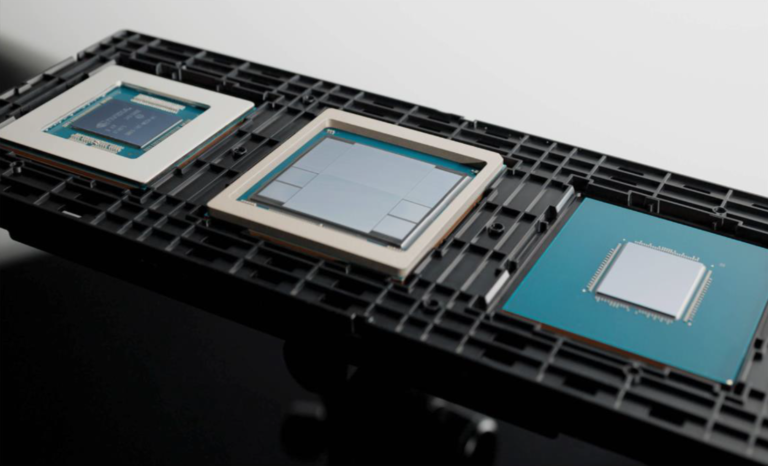

Back in August, NVIDIA’s Blackwell made its debut in the MLPerf v4.1 AI Inference benchmarks, showcasing strong performance uplifts over the last-gen Hopper chips and over the competition. Today, NVIDIA is sharing the first Blackwell benchmarks in the MLPerf v4.1 AI Training workloads, which show stunning results.

NVIDIA states that demand for compute in the AI segment is growing exponentially as new models launch, which requires faster training as well as faster inference. The inference workloads were benchmarked a few months ago, and it’s now time to look at the training tests, which cover the following workloads:

- Llama 2 70B (LLM Fine-Tuning)

- Stable Diffusion (Text-to-Image)

- DLRMv2 (Recommender)

- BERT (NLP)

- RetinaNet (Object Detection)

- GPT-3 175B (LLM Pre-Training)

- R-GAT (Graph Neural Network)

These are some of the most popular and diverse use cases for evaluating the training performance of AI accelerators, and all of them are part of the MLPerf Training v4.1 tests. The workloads give an accurate measure of time-to-train (in minutes) for the required evaluation, and the tests are backed by 125+ MLCommons members and affiliates in the consortium, who keep them aligned with the market.

Starting first with Hopper,…

Read full on Wccftech
