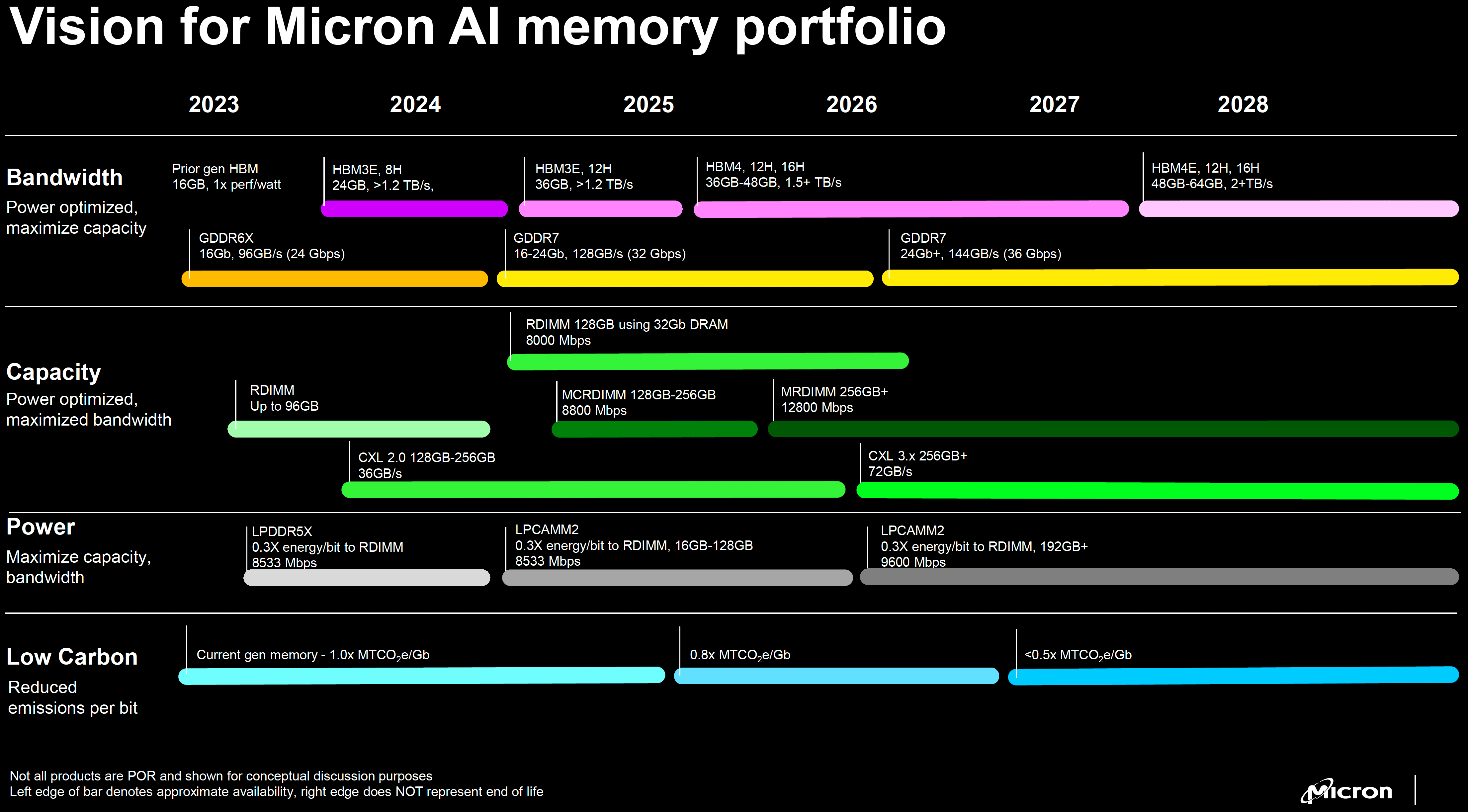

This week, Micron provided an update on its HBM4 and HBM4E programs. The next-generation HBM4 memory, with a 2048-bit interface, is on track for mass production in calendar year 2026, with HBM4E to follow in subsequent years. In addition to offering higher data transfer rates than HBM4, HBM4E will also introduce an option to customize its base die, marking a paradigm shift for the industry.

HBM4's 2048-bit memory interface is impressive on its own, but HBM4E goes further. Micron will offer customized base dies to certain customers, which means solutions optimized for specific workloads and potentially with additional features. TSMC will manufacture the custom logic dies on an advanced node, so they can pack in more cache and logic for added performance and functionality.

“HBM4E will introduce a paradigm shift in the memory business by incorporating an option to customize the logic base die for certain customers using an advanced logic foundry manufacturing process from TSMC,” said Sanjay Mehrotra, President and Chief Executive Officer of Micron. “We expect this customization capability to drive improved financial performance for Micron.”

For now, we can only speculate about how Micron plans to customize its HBM4E base logic dies. Still, the list of possibilities is long. It includes enhanced caches, basic processing capabilities, custom interface protocols tailored to particular applications (AI, HPC, networking, etc.), memory-to-memory transfer capabilities, variable interface widths, advanced voltage scaling and power gating, and custom ECC and/or security algorithms. Keep in mind that this list is speculative. At this point, it remains unclear whether an actual JEDEC standard supports such customizations.

Micron says that development work on HBM4E products is well underway with multiple customers, so we can expect different clients to adopt base dies with different configurations. This marks a step toward customized memory solutions for bandwidth-hungry AI, HPC, networking, and other applications. How customized HBM4E solutions will stack up against Marvell’s custom HBM (cHBM4) solutions introduced earlier this month remains to be seen.

Micron's HBM4 will use DRAM dies made on the company's proven 1β process (its 5th-generation 10nm-class technology), stacked on top of a base die with a 2048-bit wide interface. At a data transfer rate of around 6.4 GT/s, each stack will deliver a peak theoretical bandwidth of about 1.64 TB/s. Micron plans to ramp HBM4 to high-volume production in calendar year 2026, which aligns with the launch of Nvidia's Vera Rubin and AMD's Instinct MI400-series GPUs for AI and HPC applications. Interestingly, both Samsung and SK Hynix are rumored to use 6th-generation 10nm-class manufacturing technology for their HBM4 products.
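The 1.64 TB/s figure follows directly from the interface width and the transfer rate quoted for Micron's HBM4. The short Python sketch below reproduces that arithmetic. It assumes each of the 2048 data lines carries one bit per transfer and uses decimal units (1 TB = 10^12 bytes); the function name is purely illustrative.

```python
# Illustrative sketch: peak theoretical bandwidth of one HBM stack.
# Assumption: each data line moves one bit per transfer, so
# bits per second = interface width (bits) x transfer rate (transfers/s).

def peak_bandwidth_tb_per_s(interface_bits: int, rate_gt_per_s: float) -> float:
    """Return peak bandwidth in terabytes per second (1 TB = 10**12 bytes)."""
    bits_per_second = interface_bits * rate_gt_per_s * 1e9  # GT/s -> transfers/s
    bytes_per_second = bits_per_second / 8                   # 8 bits per byte
    return bytes_per_second / 1e12                           # bytes/s -> TB/s


if __name__ == "__main__":
    bw = peak_bandwidth_tb_per_s(2048, 6.4)
    print(f"HBM4 @ 6.4 GT/s: {bw:.2f} TB/s per stack")
```

This yields roughly 1.6384 TB/s, which rounds to the 1.64 TB/s per stack cited above. Real-world throughput will be lower once protocol and refresh overheads are taken into account.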

Micron also revealed this week that shipments of its 8-Hi HBM3E devices for Nvidia’s Blackwell processors are already in full swing. The company’s 12-Hi HBM3E stacks are being tested by its leading customers, who are reportedly satisfied with the results.

“We continue to receive positive feedback from our leading customers for Micron’s HBM3E 12-Hi stacks, which feature best-in-class power consumption—20% lower than the competition’s HBM3E 8-Hi—even as the Micron product delivers 50% higher memory capacity and industry-leading performance,” said Mehrotra.
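The "50% higher memory capacity" claim comes down to stack height: a 12-Hi stack has 12 DRAM layers versus 8 in an 8-Hi stack. The minimal sketch below assumes both stacks use the same DRAM die, taken here as 24 Gbit (3 GB) per die. Under that assumption the per-die density cancels out, and the capacity gain equals the ratio of layer counts.

```python
# Illustrative sketch: HBM stack capacity = DRAM layers x capacity per layer.
# Assumption: 24 Gbit (3 GB) per DRAM die for both the 8-Hi and 12-Hi stacks.
DIE_CAPACITY_GBIT = 24


def stack_capacity_gb(layers: int, die_gbit: int = DIE_CAPACITY_GBIT) -> float:
    """Return stack capacity in gigabytes (8 Gbit = 1 GB)."""
    return layers * die_gbit / 8


cap_8hi = stack_capacity_gb(8)    # 8 layers x 3 GB
cap_12hi = stack_capacity_gb(12)  # 12 layers x 3 GB
increase_pct = (cap_12hi / cap_8hi - 1) * 100  # percentage gain from 4 extra layers
print(f"8-Hi: {cap_8hi} GB, 12-Hi: {cap_12hi} GB, +{increase_pct:.0f}%")
```

Any die density gives the same 50% result as long as both stacks use the same die, because 12 / 8 = 1.5.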

12-Hi HBM3E stacks are expected to be used by AMD’s Instinct MI325X and MI355X accelerators, as well as Nvidia’s Blackwell B300-series compute GPUs for AI and HPC workloads.

Read full post on Tom’s Hardware

Discover more from Technical Master - Gadgets Reviews, Guides and Gaming News